Bridging the Gap between Real World and Simulation

Perception and Action

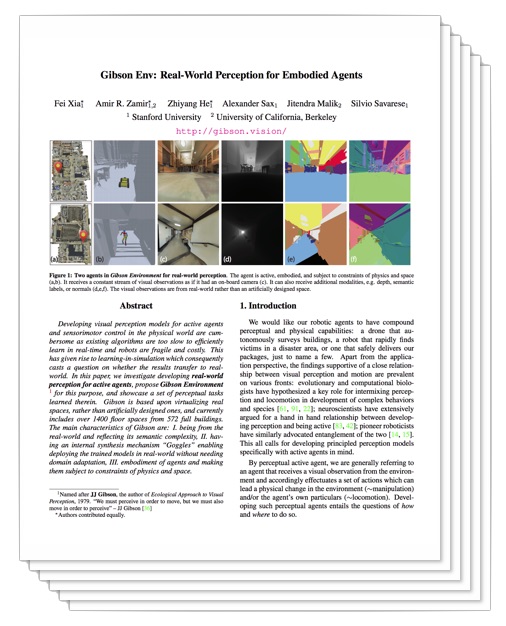

You shouldn't play video games all day, and neither should your AI! Gibson is a virtual environment based on the real world, as opposed to games or artificial environments, built to support perception learning. Gibson enables the development of algorithms that explore perception and action hand in hand.

Overview

To learn more, see the CVPR talk, the overview video below, or the paper, or visit our database page or GitHub.

Gibson Environment is named after James J. Gibson, author of The Ecological Approach to Visual Perception (1979). Read a relevant excerpt of J. J. Gibson's book here. “We must perceive in order to move, but we must also move in order to perceive.” – J. J. Gibson

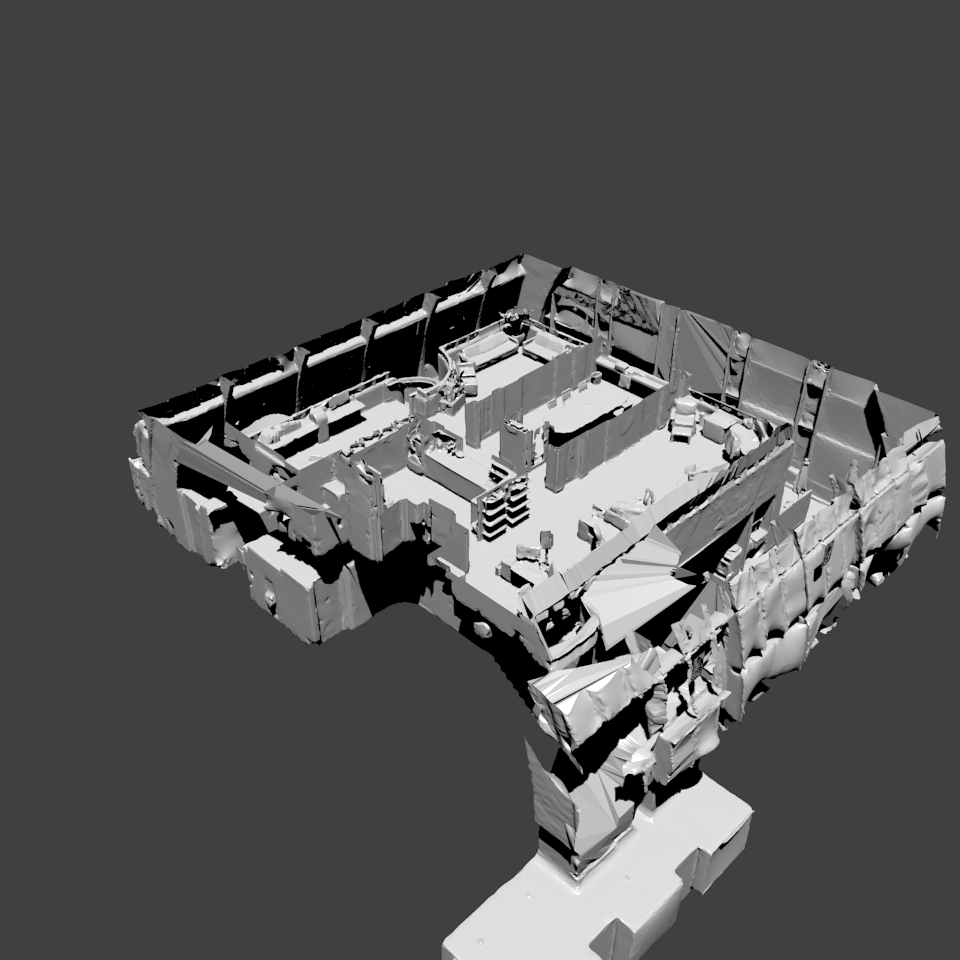

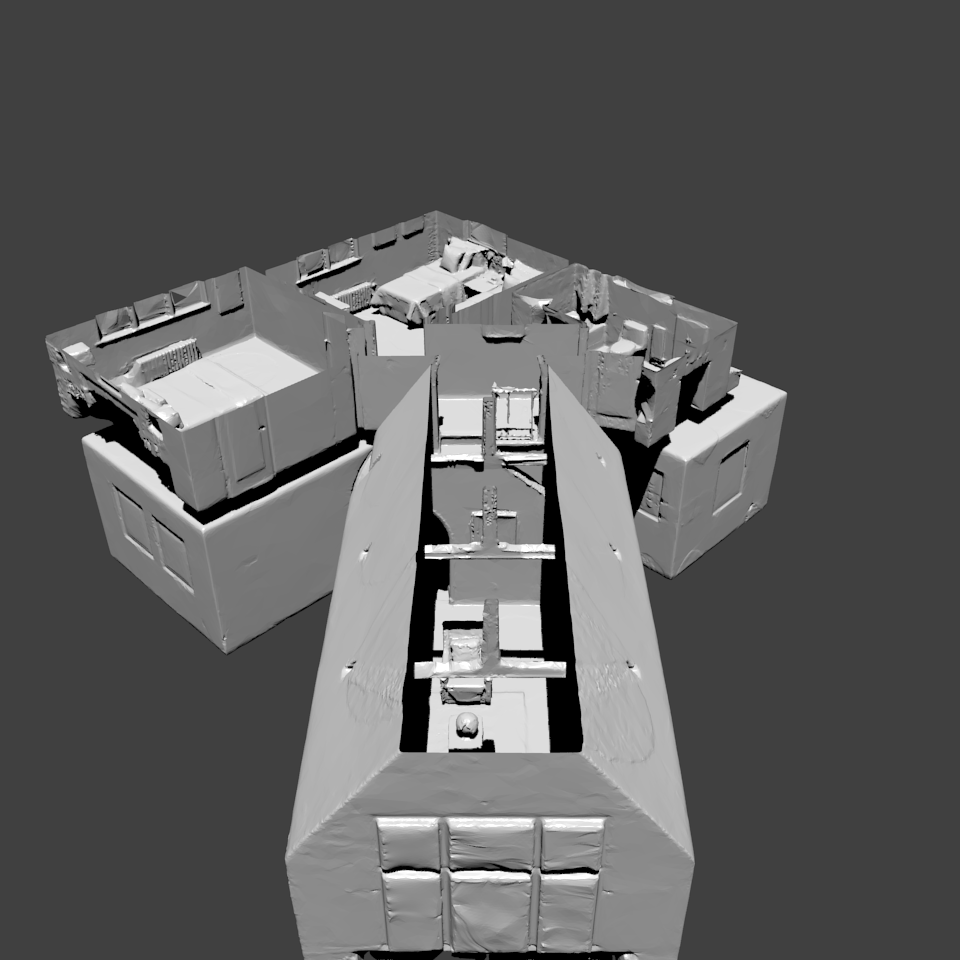

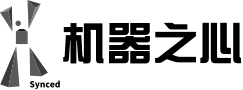

Use our open-source platform to explore active, real-world perception. Above: two agents in Gibson. The agents are active, embodied, and subject to the constraints of physics and space (a, b). They receive a constant stream of visual observations, as if from an on-board camera (c). They can also receive additional modalities, e.g., depth, semantic labels, or surface normals (d, e, f). The visual observations come from real scanned buildings.
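To make the perceive-then-act loop concrete, here is a minimal sketch under stated assumptions. It is a hypothetical toy, not Gibson's actual API: `ToyCorridorEnv`, `run_policy`, and the observation keys `"rgb"`, `"depth"`, `"normal"`, and `"semantic"` are illustrative names. A point agent moves along a corridor toward a wall; every step it receives a dictionary of per-pixel modalities, and a simple policy uses the depth channel to decide when to stop. The spatial constraint (the agent cannot pass through the wall) stands in for Gibson's physics.

```python
import numpy as np


class ToyCorridorEnv:
    """Hypothetical stand-in for an embodied simulator (NOT Gibson's API).

    A point agent moves along a 1-D corridor that ends in a wall. Each step
    it receives a dict of per-pixel modalities, as an on-board camera would.
    """

    def __init__(self, corridor_length=5.0, height=2, width=3):
        self.corridor_length = corridor_length
        self.height, self.width = height, width
        self.x = 0.0

    def reset(self):
        self.x = 0.0
        return self._observe()

    def step(self, forward):
        # Spatial constraint: the agent cannot move through the wall.
        self.x = float(np.clip(self.x + forward, 0.0, self.corridor_length))
        return self._observe()

    def _observe(self):
        shape = (self.height, self.width)
        # Every pixel sees the wall, so depth is the remaining distance.
        depth = np.full(shape, self.corridor_length - self.x, dtype=np.float32)
        # The wall's surface normal points back toward the agent (-x).
        normals = np.zeros(shape + (3,), dtype=np.float32)
        normals[..., 0] = -1.0
        semantics = np.ones(shape, dtype=np.int64)  # label 1 = "wall"
        # Fake RGB: the wall appears brighter as the agent approaches it.
        brightness = np.uint8(255 * self.x / self.corridor_length)
        rgb = np.full(shape + (3,), brightness, dtype=np.uint8)
        return {"rgb": rgb, "depth": depth, "normal": normals, "semantic": semantics}


def run_policy(env, stop_distance=1.0, step_size=0.5, max_steps=100):
    """Perception-action loop: move forward until depth says the wall is close."""
    obs = env.reset()
    trajectory = [env.x]
    for _ in range(max_steps):
        if float(obs["depth"].min()) <= stop_distance:  # perceive...
            break
        obs = env.step(step_size)  # ...then act, which yields a new observation
        trajectory.append(env.x)
    return trajectory


if __name__ == "__main__":
    print(run_policy(ToyCorridorEnv()))  # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
```

Returning every modality in one dictionary per step mirrors the idea that a single camera pose yields aligned RGB, depth, normals, and semantics; a real agent would swap the hand-written rule for a learned policy.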

Gibson Platform

Gibson Environment for Real-World Perception Learning. Gibson is open source on GitHub: check it out and train perceptual agents.

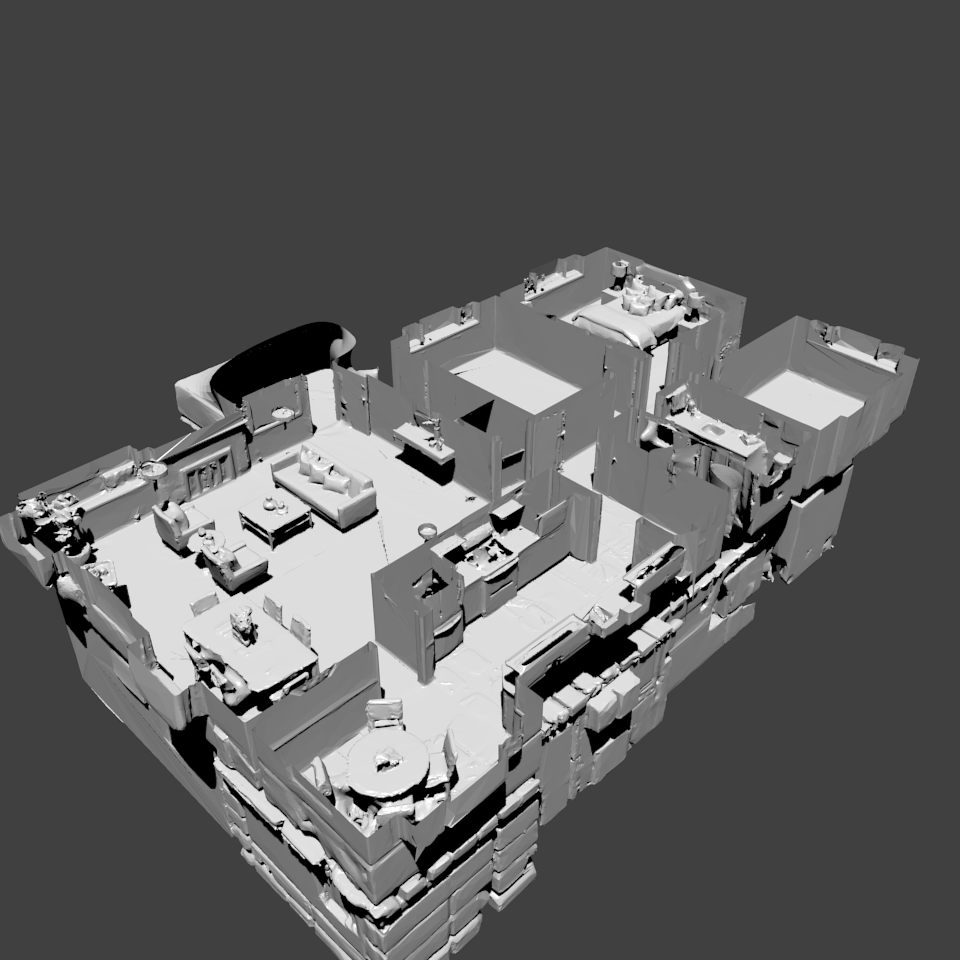

Model Database

Over 1,400 floor spaces and 572 full buildings, scanned using RGB-D cameras: a state-of-the-art 3D database.

How

Read more about our neural-network-based view synthesis pipeline, physics integration, and experimental results.

Transfer to Real World

We propose a synthesis mechanism called "Goggle" for closing the remaining perceptual gap between the virtual environment and the real world.

Check out our database page for the state-of-the-art 3D model dataset.

Team

Paper

CVPR 2018. [Spotlight Oral][NVIDIA Pioneering Research Award]

F. Xia*, A. Zamir*, Z. He*, S. Sax, J. Malik, S. Savarese.

(*equal contribution)

[Paper] [Supplementary] [Code] [Bibtex] [Slides]

Model: Bohemia